Businesses seeking to harness the power of AI need customized models tailored to their specific industry needs.

NVIDIA AI Foundry is a service that enables enterprises to use data, accelerated computing and software tools to create and deploy custom models that can supercharge their generative AI initiatives.

Just as TSMC manufactures chips designed by other companies, NVIDIA AI Foundry provides the infrastructure and tools for other companies to develop and customize AI models, using DGX Cloud, foundation models, NVIDIA NeMo software, NVIDIA expertise, and ecosystem tools and support.

The key difference is the product: TSMC produces physical semiconductor chips, while NVIDIA AI Foundry helps create custom models. Both enable innovation and connect to a vast ecosystem of tools and partners.

Enterprises can use AI Foundry to customize NVIDIA and open community models, including the new Llama 3.1 collection, as well as NVIDIA Nemotron, CodeGemma by Google DeepMind, CodeLlama, Gemma by Google DeepMind, Mistral, Mixtral, Phi-3, StarCoder2 and others.

Industry Pioneers Drive AI Innovation

Industry leaders Amdocs, Capital One, Getty Images, KT, Hyundai Motor Company, SAP, ServiceNow and Snowflake are among the first using NVIDIA AI Foundry. These pioneers are setting the stage for a new era of AI-driven innovation in enterprise software, technology, communications and media.

“Organizations deploying AI can gain a competitive edge with custom models that incorporate industry and business knowledge,” said Jeremy Barnes, vice president of AI Product at ServiceNow. “ServiceNow is using NVIDIA AI Foundry to fine-tune and deploy models that can integrate easily within customers’ existing workflows.”

The Pillars of NVIDIA AI Foundry

NVIDIA AI Foundry is supported by the key pillars of foundation models, enterprise software, accelerated computing, expert support and a broad partner ecosystem.

Its software includes AI foundation models from NVIDIA and the AI community as well as the complete NVIDIA NeMo software platform for fast-tracking model development.

The computing muscle of NVIDIA AI Foundry is NVIDIA DGX Cloud, a network of accelerated compute resources co-engineered with the world’s leading public clouds: Amazon Web Services, Google Cloud and Oracle Cloud Infrastructure. With DGX Cloud, AI Foundry customers can develop and fine-tune custom generative AI applications with unprecedented ease and efficiency, and scale their AI initiatives as needed without significant upfront investments in hardware. This flexibility is crucial for businesses looking to stay agile in a rapidly changing market.

If an NVIDIA AI Foundry customer needs assistance, NVIDIA AI Enterprise experts are on hand to help. NVIDIA experts can walk customers through each of the steps required to build, fine-tune and deploy their models with proprietary data, ensuring the models tightly align with their business requirements.

NVIDIA AI Foundry customers have access to a global ecosystem of partners that can provide a full range of support. Accenture, Deloitte, Infosys, Tata Consultancy Services and Wipro are among the NVIDIA partners that offer AI Foundry consulting services covering the design, implementation and management of AI-driven digital transformation projects. Accenture is the first to offer its own AI Foundry-based offering for custom model development, the Accenture AI Refinery framework.

Additionally, service delivery partners such as Data Monsters, Quantiphi, Slalom and SoftServe help enterprises navigate the complexities of integrating AI into their existing IT landscapes, ensuring that AI applications are scalable, secure and aligned with business objectives.

Customers can develop NVIDIA AI Foundry models for production using AIOps and MLOps platforms from NVIDIA partners, including ActiveFence, AutoAlign, Cleanlab, DataDog, Dataiku, Dataloop, DataRobot, Deepchecks, Domino Data Lab, Fiddler AI, Giskard, New Relic, Scale, Tumeryk and Weights & Biases.

Customers can output their AI Foundry models as NVIDIA NIM inference microservices, which include the custom model, optimized engines and a standard API, to run on their preferred accelerated infrastructure.
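A minimal Python sketch of a client calling such a microservice follows. It assumes the deployed microservice exposes an OpenAI-compatible `/v1/chat/completions` route on `localhost:8000`; the URL, port and `MODEL_NAME` are hypothetical placeholders, so check what your own deployment reports. Only the standard library is used, and building the request is kept separate from sending it.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible chat endpoint served locally on port 8000.
NIM_URL = "http://localhost:8000/v1/chat/completions"
# Hypothetical model name; replace with the name your microservice reports.
MODEL_NAME = "my-org/custom-llama-3.1-8b"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize our returns policy in one sentence.")
    print(req.get_method(), req.full_url)
    # print(send(req))  # uncomment once a microservice is actually running
```

Because the request format is the same regardless of where the microservice runs, the same client code could point at an on-premises server or a cloud instance by changing only `NIM_URL`.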

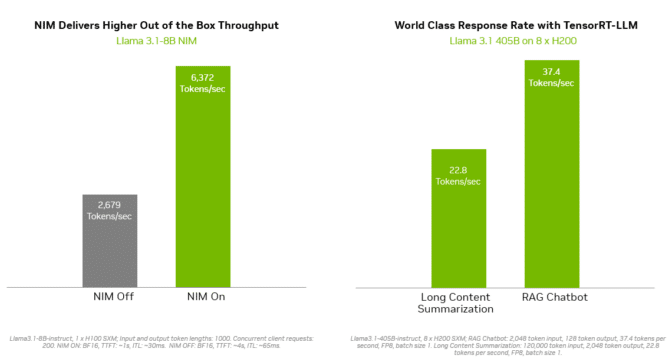

Inference solutions like NVIDIA TensorRT-LLM deliver improved efficiency for Llama 3.1 models to minimize latency and maximize throughput. This enables enterprises to generate tokens faster while reducing the total cost of running the models in production. Enterprise-grade support and security are provided by the NVIDIA AI Enterprise software suite.
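The link between throughput and cost is simple arithmetic: at a fixed hourly price for the hardware, cost per generated token is inversely proportional to tokens per second. The sketch below uses hypothetical prices and throughputs purely for illustration; they are not measured TensorRT-LLM results.

```python
def cost_per_million_tokens(gpu_hourly_usd: float, num_gpus: int, tokens_per_second: float) -> float:
    """Serving cost in USD per one million generated tokens at a sustained throughput."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = tokens_per_second * 3600
    hourly_cost = gpu_hourly_usd * num_gpus
    return hourly_cost / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical: 8 GPUs at $4/hour each, before and after a 2x throughput gain.
    baseline = cost_per_million_tokens(4.0, 8, 2_000)
    optimized = cost_per_million_tokens(4.0, 8, 4_000)
    print(f"{baseline:.2f} -> {optimized:.2f} USD per 1M tokens")  # 4.44 -> 2.22 USD per 1M tokens
```

Doubling throughput on the same hardware halves the cost per token, which is why inference optimizations show up directly in the cost of running models in production.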

The broad vary of deployment choices contains NVIDIA-Licensed Methods from international server manufacturing companions together with Cisco, Dell Applied sciences, Hewlett Packard Enterprise, Lenovo and Supermicro, in addition to cloud cases from Amazon Internet Companies, Google Cloud and Oracle Cloud Infrastructure.

Moreover, Collectively AI, a number one AI acceleration cloud, right now introduced it can allow its ecosystem of over 100,000 builders and enterprises to make use of its NVIDIA GPU-accelerated inference stack to deploy Llama 3.1 endpoints and different open fashions on DGX Cloud.

“Each enterprise working generative AI purposes desires a sooner person expertise, with larger effectivity and decrease value,” stated Vipul Ved Prakash, founder and CEO of Collectively AI. “Now, builders and enterprises utilizing the Collectively Inference Engine can maximize efficiency, scalability and safety on NVIDIA DGX Cloud.”

NVIDIA NeMo Speeds and Simplifies Custom Model Development

With NVIDIA NeMo integrated into AI Foundry, developers have at their fingertips the tools needed to curate data, customize foundation models and evaluate performance. NeMo technologies include:

- NeMo Curator is a GPU-accelerated data-curation library that improves generative AI model performance by preparing large-scale, high-quality datasets for pretraining and fine-tuning.

- NeMo Customizer is a high-performance, scalable microservice that simplifies fine-tuning and alignment of LLMs for domain-specific use cases.

- NeMo Evaluator provides automatic assessment of generative AI models across academic and custom benchmarks on any accelerated cloud or data center.

- NeMo Guardrails orchestrates dialog management, supporting accuracy, appropriateness and security in smart applications built on large language models to provide safeguards for generative AI applications.
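The curation step can be illustrated with a small plain-Python stand-in. This is not the NeMo Curator API; it is a hypothetical sketch of two common curation operations, exact deduplication on normalized text and simple heuristic quality filters, with thresholds chosen only for illustration.

```python
import hashlib
import re
from typing import Iterable, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def passes_quality_filters(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Hypothetical heuristics: drop very short documents and symbol-heavy documents."""
    words = text.split()
    if len(words) < min_words:
        return False
    non_space = [c for c in text if not c.isspace()]
    alpha = sum(c.isalpha() for c in non_space)
    return alpha / len(non_space) >= min_alpha_ratio


def curate(docs: Iterable[str]) -> List[str]:
    """Exact-deduplicate (after normalization) and filter, keeping first occurrences."""
    seen = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # duplicate of a document already processed
        seen.add(digest)
        if passes_quality_filters(doc):
            kept.append(doc)
    return kept


if __name__ == "__main__":
    corpus = [
        "The warranty covers parts and labor for two years.",
        "the warranty  covers parts and labor for two years.",
        "Too short.",
    ]
    print(curate(corpus))
```

Production pipelines typically add fuzzy or semantic deduplication and model-based quality scoring on top of exact matching, and run these steps at much larger scale.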

Using the NeMo platform in NVIDIA AI Foundry, businesses can create custom AI models that are precisely tailored to their needs. This customization allows for better alignment with strategic objectives, improved accuracy in decision-making and enhanced operational efficiency. For instance, companies can develop models that understand industry-specific jargon, comply with regulatory requirements and integrate seamlessly with existing workflows.
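One common way to tailor a foundation model without retraining all of its weights is parameter-efficient fine-tuning such as LoRA (low-rank adaptation). The article does not say which technique is used, so treat this as an illustrative assumption. LoRA freezes a weight matrix W of shape d_out × d_in and trains two small matrices, B (d_out × r) and A (r × d_in), so each adapted matrix adds r(d_in + d_out) trainable parameters instead of d_in · d_out. The pure-Python sketch below shows the forward pass and that arithmetic; the 4096 dimension and rank 16 are illustrative values.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float, rank: int) -> Vector:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    scale = alpha / rank
    return [yb + scale * yu for yb, yu in zip(base, update)]


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """A is rank x d_in and B is d_out x rank."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    lora = lora_trainable_params(4096, 4096, 16)
    full = 4096 * 4096
    print(lora, full, f"{lora / full:.2%}")  # 131072 16777216 0.78%
```

Because B is usually initialized to zero, the adapted model starts out identical to the base model, and only the small A and B matrices need to be stored per customized variant.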

“As a next step of our partnership, SAP plans to use NVIDIA’s NeMo platform to help businesses accelerate AI-driven productivity powered by SAP Business AI,” said Philipp Herzig, chief AI officer at SAP.

Enterprises can deploy their custom AI models in production with NVIDIA NeMo Retriever NIM inference microservices. These help developers fetch proprietary data to generate knowledgeable responses for their AI applications with retrieval-augmented generation (RAG).
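The retrieval step in RAG can be sketched in a few lines: embed the query and the documents, rank documents by similarity, and place the top matches into the prompt. This is a toy illustration, not the NeMo Retriever API; the bag-of-words `embed` function is a hypothetical stand-in for a real embedding model, and the example documents are made up.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words term counts (a real system would call an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(u[t] * v[t] for t in u.keys() & v.keys())
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k most similar documents with their scores, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Ground the generation step by placing retrieved passages in the prompt."""
    context = "\n".join(f"- {doc}" for _, doc in retrieve(query, docs, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    kb = [
        "Refunds are issued within 14 days of receiving the returned item.",
        "Our headquarters is located in Austin.",
    ]
    print(build_prompt("How many days until refunds are issued?", kb, k=1))
```

The quality of the final answer depends heavily on whether the right passages land in the prompt, which is why retrieval accuracy matters as much as the generator itself.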

“Safe, trustworthy AI is a non-negotiable for enterprises harnessing generative AI, with retrieval accuracy directly impacting the relevance and quality of generated responses in RAG systems,” said Baris Gultekin, Head of AI, Snowflake. “Snowflake Cortex AI leverages NeMo Retriever, a component of NVIDIA AI Foundry, to further provide enterprises with easy, efficient, and trusted answers using their custom data.”

Custom Models Drive Competitive Advantage

One of the key advantages of NVIDIA AI Foundry is its ability to address the unique challenges enterprises face in adopting AI. Generic AI models can fall short of meeting specific business needs and data security requirements. Custom AI models, on the other hand, offer superior flexibility, adaptability and performance, making them ideal for enterprises seeking to gain a competitive edge.

Learn more about how NVIDIA AI Foundry enables enterprises to boost productivity and innovation.