Amazon Web Services and NVIDIA will bring the latest generative AI technologies to enterprises worldwide.

Combining AI and cloud computing, NVIDIA founder and CEO Jensen Huang joined AWS CEO Adam Selipsky Tuesday on stage at AWS re:Invent 2023 at the Venetian Expo Center in Las Vegas.

Selipsky said he was “thrilled” to announce the expansion of the partnership between AWS and NVIDIA with more offerings that will deliver advanced graphics, machine learning and generative AI infrastructure.

The two announced that AWS will be the first cloud provider to adopt the latest NVIDIA GH200 NVL32 Grace Hopper Superchip with new multi-node NVLink technology, that AWS is bringing NVIDIA DGX Cloud to AWS, and that AWS has integrated some of NVIDIA’s most popular software libraries.

Huang started the conversation by highlighting the integration of key NVIDIA libraries with AWS, ranging from NVIDIA AI Enterprise to cuQuantum to BioNeMo and catering to domains like data processing, quantum computing and digital biology.

The partnership opens AWS to millions of developers and the nearly 40,000 companies that are using these libraries, Huang said, adding that it’s great to see AWS expand its cloud instance offerings to include NVIDIA’s new L4, L40S and, soon, H200 GPUs.

Selipsky then introduced the AWS debut of the NVIDIA GH200 Grace Hopper Superchip, a significant advancement in cloud computing, and prompted Huang for further details.

“Grace Hopper, which is GH200, connects two revolutionary processors together in a really unique way,” Huang said. He explained that the GH200 connects NVIDIA’s Grace Arm CPU with its H200 GPU using a chip-to-chip interconnect called NVLink, at an astonishing one terabyte per second.

Each processor has direct access to the high-performance HBM and efficient LPDDR5X memory. This configuration results in 4 petaflops of processing power and 600GB of memory for each superchip.

AWS and NVIDIA connect 32 Grace Hopper Superchips in each rack using a new NVLink switch. Each 32 GH200 NVLink-connected node can be a single Amazon EC2 instance. When these are integrated with AWS Nitro and EFA networking, customers can connect GH200 NVL32 instances to scale to thousands of GH200 Superchips.
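
As a rough illustration of what those figures imply for one NVLink-connected node, the per-superchip numbers quoted above can simply be multiplied by 32. This is a back-of-the-envelope sketch, not an official specification: it assumes compute and memory add up linearly across the node, and the function name `nvl32_totals` is hypothetical.

```python
# Per-superchip figures as quoted in the article.
PFLOPS_PER_SUPERCHIP = 4         # petaflops of processing power
MEMORY_GB_PER_SUPERCHIP = 600    # GB of HBM plus LPDDR5X memory
SUPERCHIPS_PER_NVL32 = 32        # superchips joined by the NVLink switch


def nvl32_totals(n_superchips=SUPERCHIPS_PER_NVL32):
    """Return (petaflops, memory in TB) for one NVLink-connected node.

    Assumes simple linear aggregation, which is an idealization.
    """
    petaflops = n_superchips * PFLOPS_PER_SUPERCHIP
    memory_tb = n_superchips * MEMORY_GB_PER_SUPERCHIP / 1000  # decimal TB
    return petaflops, memory_tb


petaflops, memory_tb = nvl32_totals()
print(f"{petaflops} petaflops, {memory_tb} TB")  # 128 petaflops, 19.2 TB
```

Under that assumption, a single 32-superchip node works out to about 128 petaflops and roughly 19.2TB of combined memory.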

“With AWS Nitro, that becomes basically one giant virtual GPU instance,” Huang said.

The combination of AWS expertise in highly scalable cloud computing plus NVIDIA innovation with Grace Hopper will make this an amazing platform that delivers the highest performance for complex generative AI workloads, Huang said.

“It’s great to see the infrastructure, but it extends to the software, the services and all the other workflows that they have,” Selipsky said, introducing NVIDIA DGX Cloud on AWS.

This partnership will bring about the first DGX Cloud AI supercomputer powered by the GH200 Superchips, demonstrating the power of AWS’s cloud infrastructure and NVIDIA’s AI expertise.

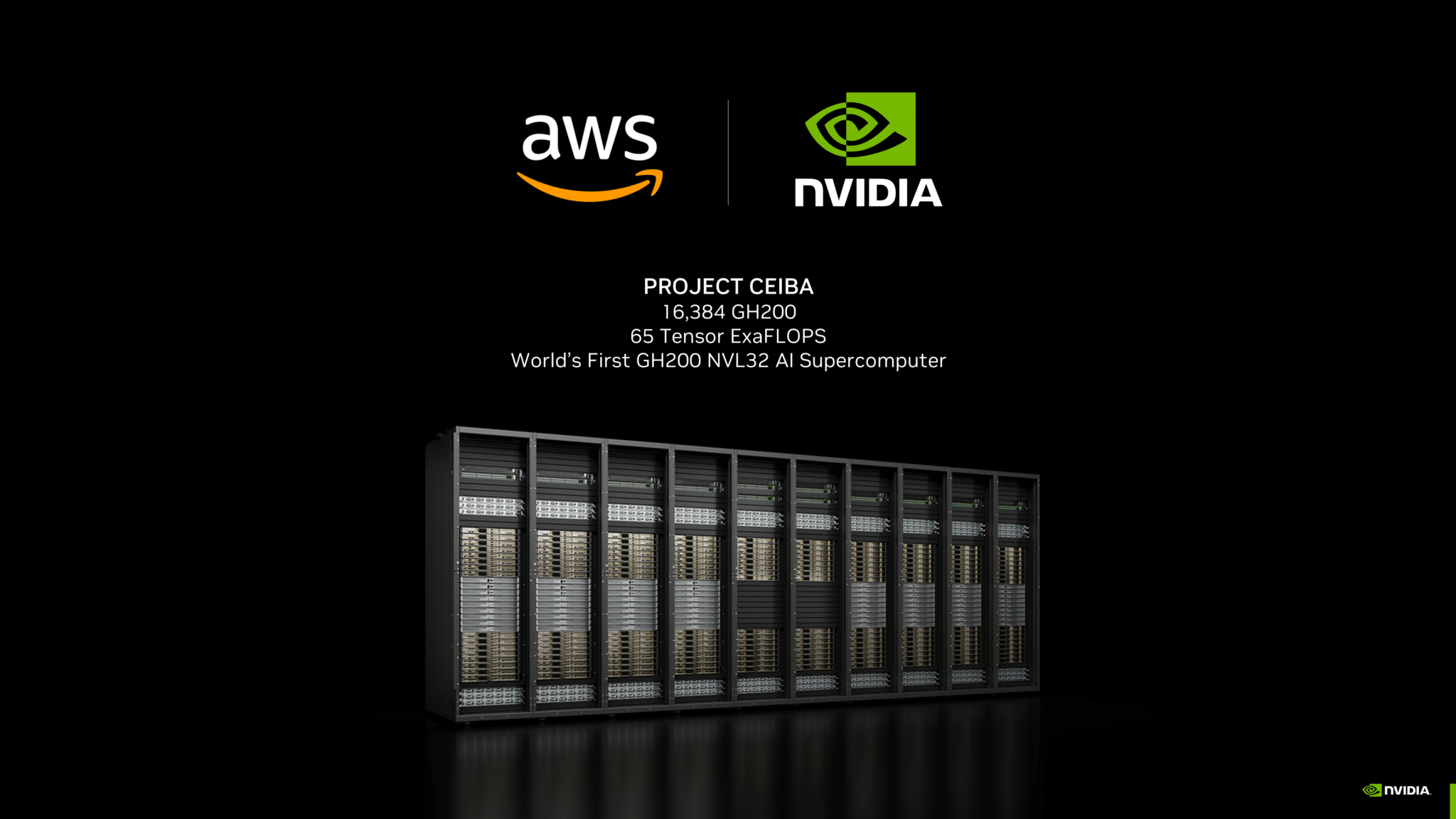

Following up, Huang announced that this new DGX Cloud supercomputer design in AWS, codenamed Project Ceiba, will also serve as NVIDIA’s newest AI supercomputer, for its own AI research and development.

Named after the majestic Amazonian Ceiba tree, the Project Ceiba DGX Cloud cluster incorporates 16,384 GH200 Superchips to achieve 65 exaflops of AI processing power, Huang said.
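
The 65-exaflop figure is consistent with the per-superchip number quoted earlier in the article. The sketch below checks that arithmetic and also counts how many 32-superchip NVLink nodes such a cluster would contain. It assumes ideal linear scaling across the cluster, and `ceiba_estimate` is a hypothetical helper name used only for illustration.

```python
# Figures quoted in the article.
CEIBA_SUPERCHIPS = 16_384
PFLOPS_PER_SUPERCHIP = 4
SUPERCHIPS_PER_NVL32 = 32


def ceiba_estimate(superchips=CEIBA_SUPERCHIPS):
    """Return (exaflops, number of 32-superchip nodes) for a cluster.

    Assumes every superchip contributes its full quoted throughput.
    """
    exaflops = superchips * PFLOPS_PER_SUPERCHIP / 1000  # 1 exaflop = 1,000 petaflops
    nodes = superchips // SUPERCHIPS_PER_NVL32
    return exaflops, nodes


exaflops, nodes = ceiba_estimate()
print(f"{exaflops:.1f} exaflops across {nodes} NVL32 nodes")
# 65.5 exaflops across 512 NVL32 nodes
```

The product comes to about 65.5 exaflops, in line with the 65 exaflops Huang cited, spread over 512 NVLink-connected nodes.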

Ceiba will be the world’s first GH200 NVL32 AI supercomputer built and the newest AI supercomputer in NVIDIA DGX Cloud, Huang said.

Huang described the Project Ceiba AI supercomputer as “utterly incredible,” saying it will be able to cut the training time of the largest language models in half.

NVIDIA’s AI engineering teams will use this new supercomputer in DGX Cloud to advance AI for graphics, LLMs, image/video/3D generation, digital biology, robotics, self-driving cars, Earth-2 climate prediction and more, Huang said.

“DGX is NVIDIA’s cloud AI factory,” Huang said, noting that AI is now key to doing NVIDIA’s own work in everything from computer graphics to creating digital biology models to robotics to climate simulation and modeling.

“DGX Cloud is also our AI factory to work with enterprise customers to build custom AI models,” Huang said. “They bring data and domain expertise; we bring AI technology and infrastructure.”

In addition, Huang announced that AWS will bring four Amazon EC2 instances based on the NVIDIA GH200 NVL, H200, L40S and L4 GPUs to market early next year.

Selipsky wrapped up the conversation by announcing that GH200-based instances and DGX Cloud will be available on AWS in the coming year.

You can catch the discussion and Selipsky’s entire keynote on AWS’s YouTube channel.